Using Confidence to Identify Risks

Rather than starting the search for risks in a negative way, Quarkside recommends using reverse psychology and asking about success: “How confident are you that targets will be met?” Asking about confidence levels not only helps to identify risks, it also presents a positive view rather than a negative one. Simple bar charts can be used to show targets, changes of opinion and alerts for areas of concern felt by staff.

Average Confidence

The chart above may look gloomy, but this was the state of play in a large public sector project. It was the result of interviewing many levels of staff.

- Anything below 50% confidence is worth further investigation and should be entered in the risk log. Immediate risk reduction action should be taken.

- For intermediate levels of confidence, say between 50% and 75%, risk log entries should reflect the reduced level of risk. The root cause for reduced confidence should be investigated.

- Even where confidence of success is high, greater than 75%, there should be a risk log entry if the impact of failure is high.

Without claiming intellectual rigour,

Risk Probability% = 100% – Confidence%

Managers are comfortable with this concept – high confidence equates to low risk and vice versa. Discussion helps people to accept that simple quantification of risks is neither difficult nor threatening.
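The rule of thumb and the three confidence bands above can be written down in a few lines. This is only a minimal sketch: the function names are illustrative, and treating exactly 50% and exactly 75% as "intermediate" is an assumption drawn from the wording of the bullets.

```python
def risk_probability(confidence_pct: float) -> float:
    """Risk Probability% = 100% - Confidence%."""
    if not 0 <= confidence_pct <= 100:
        raise ValueError("confidence must be between 0 and 100")
    return 100.0 - confidence_pct


def confidence_band(confidence_pct: float) -> str:
    """Band a confidence level using the 50% and 75% thresholds."""
    if confidence_pct < 50:
        return "low"            # investigate and act immediately
    if confidence_pct <= 75:
        return "intermediate"   # log the reduced risk, find the root cause
    return "high"               # log only if the impact of failure is high


def needs_risk_log_entry(confidence_pct: float, high_impact: bool) -> bool:
    """Low and intermediate confidence are always logged; high confidence
    is logged only when the impact of failure is high."""
    return confidence_band(confidence_pct) != "high" or high_impact


print(risk_probability(90), confidence_band(60), needs_risk_log_entry(90, True))
```
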

Confidence Management Process

It may be old-fashioned, but Quarkside is a proponent of managing to a baseline, or vision, or goal, or whatever you want to call it. Let's also call them strategic objectives. The main point is that they are organisation-wide, and that leadership has ensured that everybody understands them and has bought into them.

Interviews are carried out using a one-page questionnaire that records levels of confidence. A five-point scale ranges from totally confident to minimally confident. The responses are subsequently allocated values from 90% to 10%. The analyst can also select extreme values, say 100% or 0%, if the interviewee expresses strong opinions during the course of an interview. Comments on the reasons for low values are welcomed; a reason raised by several interviewees highlights the root cause of a risk.
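As an illustration of the scoring step, the sketch below converts questionnaire answers into percentages and averages them for one target. Only the end points of 90% and 10% come from the description above; the evenly spaced intermediate values (70%, 50%, 30%) and the numbering of the scale from 5 to 1 are assumptions made for the example.

```python
from statistics import mean

# Assumed mapping: 5 = totally confident ... 1 = minimally confident.
# Only the 90% and 10% end points are given; the rest are evenly spaced guesses.
SCALE_TO_PCT = {5: 90, 4: 70, 3: 50, 2: 30, 1: 10}


def to_percent(score, override=None):
    """Return the confidence percentage for one answer.

    `override` lets the analyst record an extreme value such as 100 or 0
    when the interviewee expresses a strong opinion.
    """
    if override is not None:
        if not 0 <= override <= 100:
            raise ValueError("override must be between 0 and 100")
        return override
    return SCALE_TO_PCT[score]


def average_confidence(answers):
    """Average confidence for one target from (score, override) pairs."""
    return mean(to_percent(score, override) for score, override in answers)


# Three interviewees: one confident, one neutral, one with no confidence at all.
print(average_confidence([(5, None), (3, None), (1, 0)]))
```
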

To encourage open and frank responses, an independent interviewer asks questions in confidence and ensures that comments are not attributable to a specific person. Interview data is analysed and presented in a report. The contents include commentary on areas of high and low confidence and references to the risk log.

After several months, second and subsequent reports discuss the change in confidence levels since the previous report. A change chart graphically indicates the effect of risk reduction since the previous review. The process provides feedback into the risk management control loop.
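The figures behind a change chart are simply the differences between two reviews. A minimal sketch, assuming average confidence is held per target in a dictionary (the target names used here are hypothetical):

```python
def confidence_change(previous, current):
    """Change in average confidence per target between two reviews.

    Positive values suggest risk reduction has worked; negative values
    flag targets where confidence has fallen since the last report.
    Targets missing from either review are skipped.
    """
    return {
        target: current[target] - previous[target]
        for target in previous
        if target in current
    }


first_review = {"Delivery date": 40, "Budget": 80}
second_review = {"Delivery date": 55, "Budget": 70}
print(confidence_change(first_review, second_review))
```
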

Most importantly, it supports the risk management process by flushing out risks that may not have been formalised. In extreme circumstances, it could contribute to a decision to change the baseline business or project targets.

Experience

The method has shown benefits in £billion programmes, but it could be applied to any form of project, even an Agile one. Some key findings were:

- Confidential, non-attributable interviews help to open up discussions and identify root causes of problems. They allow comment at peer level that might not surface in the presence of overbearing managers.

- The initial interview requires a few minutes to explain the concepts and establish understanding of the business objectives. Subsequent interviews are quicker to execute and frank answers are obtained in less than one hour.

- The questioning technique encourages managers to think more quantitatively about business targets and the probability of achieving them. They feel comfortable that 90% confidence has a residual 10% risk, and that it is fair to include it in a risk log.

- Levels of confidence can diverge widely between interviewees. Whether the cause is lack of communication or “head in sand”, it is useful data worthy of further investigation.

- In programmes experiencing difficulties, the results provide a focus for debate at board level. One organisation used the results to renegotiate a major contract.

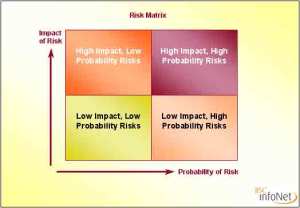

- Even with generally satisfactory levels of confidence, it is worth investigating the target with the lowest confidence. One internal audit team raised a security risk with an impact greater than £1 billion; procedures were tightened. This is the kind of company-threatening risk that is missed by traditional risk matrices, and it led to the Risk Index, which is described in the final section.
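Two of the findings above, widely diverging opinions and the target with the lowest confidence, are easy to pick out of the interview data. The sketch below is one possible way of doing it; the 40-point threshold for "divergent" is an arbitrary assumption, not part of the method, and the target names are hypothetical.

```python
from statistics import mean


def divergent_targets(responses, threshold=40):
    """Targets where the gap between the most and least confident
    interviewee is at least `threshold` percentage points (assumed value)."""
    return [
        target
        for target, values in responses.items()
        if max(values) - min(values) >= threshold
    ]


def lowest_confidence_target(responses):
    """Target with the lowest average confidence across interviewees."""
    return min(responses, key=lambda target: mean(responses[target]))


responses = {
    "Delivery date": [90, 10, 50],   # opinions differ sharply
    "Security": [70, 70, 90],        # broad agreement
}
print(divergent_targets(responses), lowest_confidence_target(responses))
```
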

Looking to the future, the method should be used on all public sector programmes that rely on computer information for success, e.g. Universal Credit, Health Service ICT, Individual Electoral Registration, the Government ICT Strategy and Identity Management.